Flexible Rendering and Lookdev Pipelines with Malt + Godot

So! Since my last article on Godot Pipelines, a lot has happened, including the start of my PhD (on real-time non-photorealistic rendering!). One of those things is the release of Malt 1.0 Preview, which is super useful for me in all kinds of ways. Today we’re going to look at how to use it with Godot to quickly create rendering pipelines and, most importantly, get the same rendering in both Blender and Godot!

I cannot overstate how useful it is to have both use the same rendering code. Faster iterations, less work, better results, better tools… Non-photorealistic rendering really needs that kind of flexibility as there’s no standard, and by definition all renders are going to be different.

Today we’re gonna set up a small two-pass render pipeline, like last time, to see how it’s done. You can find the code here.

UPDATE November 1st 2023: Malt has received some updates which changed the interface. I’ve given some fixed code here but haven’t updated the repo yet.

Creating the Malt Pipeline

The main feature we’re interested in here is custom render pipelines, which give us complete control over what is rendered. This is where Malt creates the buffers and basic parameters, and where we can make our OpenGL calls. Let’s start by taking the Mini Pipeline as a base, and add things one at a time.

The main functions are as follows:

- __init__: Sets up our parameters and arguments.
- compile_material_from_source: Compiles the shaders.
- setup_render_targets: Sets up our buffers and FBOs (Render Targets). This is the Malt equivalent of making new viewports in Godot.
- do_render: Does the actual rendering calls. This is where we pass parameters and buffers to the passes.

Frame Buffer Objects (FBOs) / Render Targets: This is a collection of buffers that a shader will write to. While in Godot we can only output to one buffer, in regular rendering we can write to several. This is actually how deferred shading works: write all our parameters to several buffers in a first pass, then sample them all in a second pass to compute the final color.
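To make the idea concrete, here is a minimal CPU-side sketch of that two-step scheme in plain Python. It is a toy model, not Malt or OpenGL code: the target names (`albedo`, `light`), the tiny resolution, and the pixel values are all made up for illustration.

```python
# Toy CPU model of a two-pass deferred render; not Malt or OpenGL code.
WIDTH, HEIGHT = 4, 2  # hypothetical tiny resolution


def first_pass(x, y):
    """Return several outputs per pixel, like a shader bound to an FBO with two targets."""
    albedo = (x / (WIDTH - 1), y / (HEIGHT - 1), 0.5)
    light = 1.0 if x >= WIDTH // 2 else 0.25
    return {"albedo": albedo, "light": light}


def render_first_pass():
    """Fill every render target in one go (one 'draw' writes to all of them)."""
    targets = {"albedo": {}, "light": {}}
    for y in range(HEIGHT):
        for x in range(WIDTH):
            for name, value in first_pass(x, y).items():
                targets[name][(x, y)] = value
    return targets


def second_pass(targets, x, y):
    """Sample all the buffers written by the first pass and combine them."""
    albedo = targets["albedo"][(x, y)]
    light = targets["light"][(x, y)]
    return tuple(channel * light for channel in albedo)


def render_frame():
    targets = render_first_pass()
    return {
        (x, y): second_pass(targets, x, y)
        for y in range(HEIGHT)
        for x in range(WIDTH)
    }
```

The point is only the data flow: the first pass never computes a final color, it just stores what the second pass will need.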

Next, here’s our mesh shader. The only thing it does is render some data for the second pass, here by filling the red channel.

Finally, set the color profile to linear in the film panel (set Display Device to None).

Adding Lights

For now we don’t have anything to compute lighting, so let’s change that. As we’re writing low-level code, we would usually need to pass each light parameter manually, but Malt has a few helpers we’re going to use since we aren’t doing anything special with the lights themselves.

The following code will do two things:

- Set up the lights in the __init__ function.
- Load the current frame’s light data into the UBO of the first render pass.

Uniform Buffer Objects (UBOs): This is the collection of the uniforms (think of them as parameters for the shader) we will send to the shader. Every shader will define what uniforms it will have, and the pipeline will set their value before rendering.
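As a rough illustration of what "a collection of uniforms" means on the CPU side, here is a small sketch that packs light parameters into one contiguous byte buffer with Python's `struct` module. The layout (two vec4 per light) and the helper names are assumptions made for this example; Malt's real light UBO is laid out differently and is filled by its own helpers.

```python
import struct

# Hypothetical light layout, chosen for illustration; Malt's real light UBO differs.
# Each field is a vec4 (4 floats, 16 bytes), which keeps 16-byte alignment trivial.
LIGHT_FORMAT = "<4f4f"  # position (xyz + padding), color (rgb + intensity)


def pack_light(position, color, intensity):
    """Pack one light into 32 bytes."""
    px, py, pz = position
    r, g, b = color
    return struct.pack(LIGHT_FORMAT, px, py, pz, 0.0, r, g, b, intensity)


def pack_lights(lights):
    """Concatenate all lights into the byte buffer that would be uploaded once per frame."""
    return b"".join(pack_light(*light) for light in lights)


def unpack_light(buffer, index):
    """Read a light back, roughly what the shader does when it indexes the array."""
    size = struct.calcsize(LIGHT_FORMAT)
    values = struct.unpack_from(LIGHT_FORMAT, buffer, index * size)
    return values[0:3], values[4:7], values[7]
```

The pipeline's job each frame is then just to rebuild this buffer from the scene and bind it before drawing.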

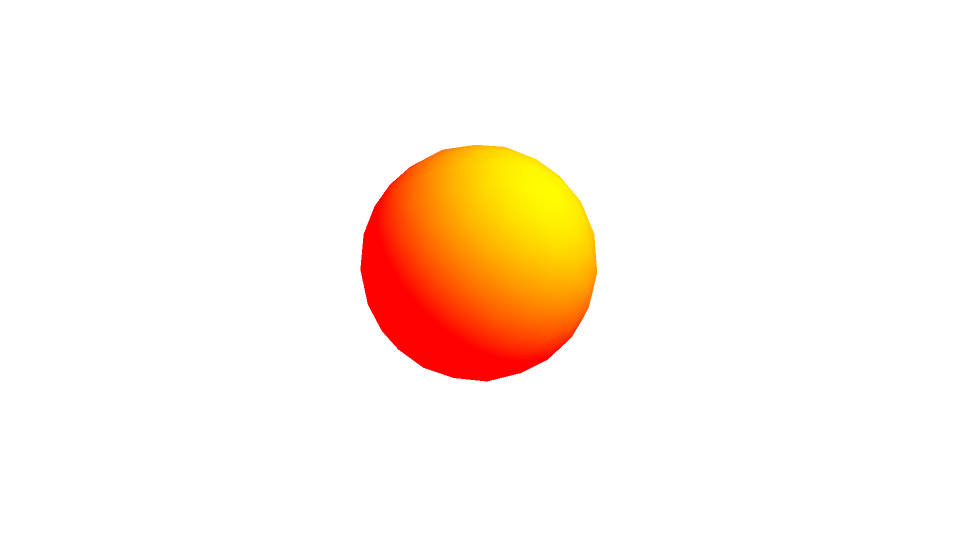

Then we update the shader to take those lights and use them to compute the lighting, which we put in the green channel.
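For reference, here is the kind of per-pixel math this step boils down to, written as plain Python rather than GLSL. I'm assuming a simple Lambert diffuse term from a single point light here; that is a guess at a reasonable lighting model, not necessarily what the original shader computes, and the value written to the red channel is a placeholder.

```python
import math


def normalize(v):
    """Return v scaled to unit length (v must not be the zero vector)."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def lambert(normal, surface_pos, light_pos):
    """Diffuse term: cosine of the angle between the normal and the light direction."""
    n = normalize(normal)
    to_light = normalize(tuple(lp - sp for lp, sp in zip(light_pos, surface_pos)))
    return max(sum(a * b for a, b in zip(n, to_light)), 0.0)


def shade(normal, surface_pos, light_pos):
    """Output for the first pass: red = placeholder data, green = lighting."""
    return (1.0, lambert(normal, surface_pos, light_pos), 0.0)
```

A surface facing the light gets 1.0 in the green channel, and one facing away gets 0.0.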

Render Targets

The next step is creating our buffers for the passes. We’ll simply rename the first one and add a second; there’s no need for the depth pass.

Creating the second pass

Now the trickier part. To have it render correctly we will have to both register a new material for the pass in the __init__ function, and use it in the do_render function. Then, we will pass the result of the previous render to it as a uniform. Finally, we create the shader for the second pass.
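The overall shape of those three steps can be sketched with a stand-in class. To be clear, `ToyPipeline` below does not subclass or call anything from Malt; it only reuses the `__init__` / `do_render` names from above, and the "materials" are plain Python functions. The uniform name `IN_PASS` and the way the second pass combines the channels are invented for the example.

```python
def mesh_shader(pixel):
    """First pass: write (red, green) = (placeholder data, lighting)."""
    return (1.0, pixel["light"])


def composite_shader(index, uniforms):
    """Second pass: read the first pass through a uniform and build the final color."""
    red, green = uniforms["IN_PASS"][index]
    return (red * green,) * 3


class ToyPipeline:
    """Stand-in for a render pipeline; it does not use Malt's API."""

    def __init__(self):
        # Register one "material" per pass, so do_render can look them up later.
        self.materials = {
            "mesh": mesh_shader,
            "composite": composite_shader,
        }

    def do_render(self, pixels):
        # Pass 1: render the scene data into an intermediate buffer.
        first = [self.materials["mesh"](pixel) for pixel in pixels]
        # Pass 2: hand that buffer to the second material as a uniform.
        uniforms = {"IN_PASS": first}
        return [
            self.materials["composite"](index, uniforms)
            for index in range(len(pixels))
        ]
```

Usage is just `ToyPipeline().do_render(pixels)` with a list of per-pixel dictionaries; in the real pipeline the intermediate buffer is a texture and the lookup is a texture sample.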

Creating the Godot Pipeline

This is what we did in the last article on Godot Pipelines. I prefer doing the tests in Malt since it’s faster for prototyping and has full OpenGL support, but since Godot has its own shading language, you should keep its limitations in mind. Oddlib has evolved a bit, so I’ll give the updated code here:

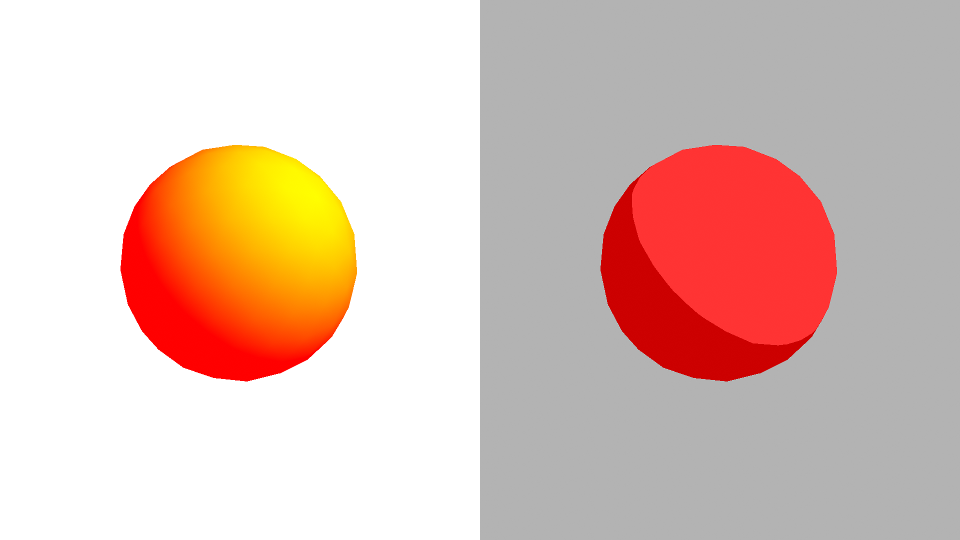

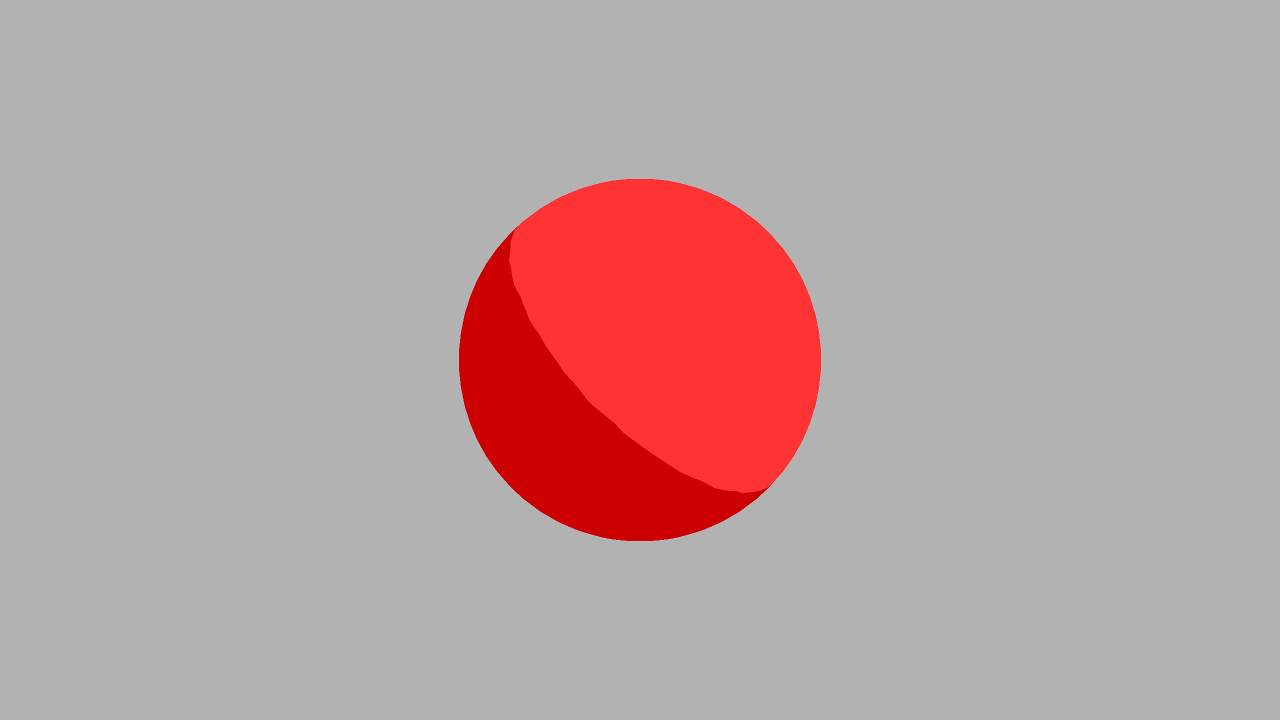

Since this shader does the same things as the GLSL shader, and the pipeline has the same ordering of passes, this gives us identical or near-identical results depending on the parameters we use (don’t forget to activate the linear color profile in Blender’s film panel).
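To see why the color profile matters so much for the comparison, here is a small sketch of the standard sRGB transfer function in Python. I'm assuming the mismatch you would otherwise get is a plain sRGB encode applied on one side only; Blender's actual view transforms can be more involved than this.

```python
def linear_to_srgb(c):
    """Encode a linear value in [0, 1] with the standard sRGB transfer function."""
    if c <= 0.0031308:
        return 12.92 * c
    return 1.055 * c ** (1.0 / 2.4) - 0.055


def srgb_to_linear(c):
    """Inverse of linear_to_srgb."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4
```

A shader output of 0.5 shows up as roughly 0.735 once encoded, so two renderers running the exact same shader will still look different if only one of them applies the transform.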

Where to go from here?

So, now that we have seen how to set up a simple pipeline, you can apply it to your project! I think we can go even further by using the same shader source for both Malt and Godot, although that would require a preprocessor and a lot of #ifdefs.
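As a rough idea of what such a preprocessor could look like, here is a minimal Python sketch that resolves `#ifdef` / `#else` / `#endif` blocks in a shared source. The `GODOT` define and the shader snippet are hypothetical, and real shaders differ in much more than their header (entry points, built-ins, uniforms), so take this as the skeleton of the idea rather than a working toolchain.

```python
def preprocess(source, defines):
    """Keep only the lines whose enclosing #ifdef branches are all active."""
    output = []
    stack = []  # one bool per open #ifdef: is the current branch active?
    for line in source.splitlines():
        stripped = line.strip()
        if stripped.startswith("#ifdef"):
            stack.append(stripped.split()[1] in defines)
        elif stripped == "#else":
            stack[-1] = not stack[-1]
        elif stripped == "#endif":
            stack.pop()
        elif all(stack):
            # Other directives such as #version pass through untouched.
            output.append(line)
    return "\n".join(output)


# Hypothetical shared source: only the header differs between targets.
SHARED_SHADER = """\
#ifdef GODOT
shader_type spatial;
#else
#version 410 core
#endif
float lambert(vec3 n, vec3 l) { return max(dot(n, l), 0.0); }
"""
```

Calling `preprocess(SHARED_SHADER, {"GODOT"})` keeps the Godot header, while an empty set of defines keeps the GLSL one; the shared function is emitted in both cases.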

This has already been super useful for me, so I’ll probably continue to dig into the subject. Join the Discord if you want to stay up to date!